[Review] Immersive Hackathon 2022

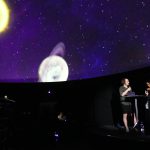

After a successful start last fall, the Immersive Hackathon took place for the second time last weekend, this time under the motto “Metaversed”. From June 17 to 18, twenty XR developers and planetarium enthusiasts had a whole night to tinker, develop, test and get creative.

The teams had 24 hours to organize themselves, program a prototype and prepare their presentation. By Friday afternoon, the participants had already gotten to know each other, pitched their individual ideas and visions, and formed teams. In the end, five teams of three to six members each competed against each other.

At 3 p.m. on Saturday, coding ended and the jury began its tour of the Kunstmuseum Bochum, where the teams had locked themselves in with their technology for the night. The jury, consisting of Prof. Dr. Susanne Hüttemeister (Director, Zeiss Planetarium Bochum), Laura Saenger Pacheco (VR specialist, director and fulldome enthusiast), Thomas Riedel (journalist & podcaster) and Prof. Dr. Detlef Gerhard (Chair of Digital Engineering at Ruhr University Bochum), evaluated the projects according to five criteria: Potential, Creativity, Degree of Innovation, Quality of Prototype, and Presentation/Pitch.

This year, however, the jury was not the only one to give an evaluation. The 5-minute presentation in the dome, in front of about 100 people, was an important hurdle and counted with a 50% weighting. The audience could vote digitally for their favorite team.

Overall, both the jury and the audience were amazed at the quality of the presentations and prototypes produced in such a short time. The organizers from Zeiss Planetarium Bochum were also highly satisfied. Technical director Tobias Wiethoff praised the cooperation with Places: “Like last year, the Places team and mxr storytelling organized and executed the Immersive Hackathon with great attention to detail and a high level of professionalism. We can build on this result and set our sights on the planetarium's 100th anniversary next year.”

Team form follows functions won 1st place and 2,000 € with their application Shape & Color. MetaView – Episode: Deep Sea took 2nd place and 1,000 €, and Team SOULPLANET was pleased with 3rd place and 500 € in prize money. Teams Wurmloch and Planet B did not have to go home empty-handed either: the Freundeskreis Planetarium Bochum e.V. provided an additional 250 € per team.

We are continuing to work on uniting the worlds of XR and the dome. To that end, a Discord channel was created during the Immersive Hackathon, and all interested parties are cordially invited to join.

MetaView - Episode: Deep Sea

Finn Lichtenberg | Visual Coder / 3D Artist

Vesela Stanoeva | Storytelling / Sound Designer

Elisa Drache | 3D Artist / Grading, Post-Processing

Ali Hackalife | Sound Designer / 3D Artist

Michael Cegielka | World Designer / Visual Coder

Short description of the project

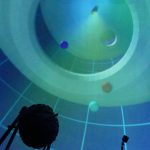

Idea | Content

The META VIEW project, created within 24 hours, is a new way to bring the metaverse into the planetarium. A head-mounted display serves as an immersive, interactive presentation tool whose view is played out on the dome. This allows the tour guide and the audience to immerse themselves in a metaverse together.

In the first episode, we swim amidst particles that we can easily perceive. We move through dimensions we cannot imagine, telling stories about what is real, what is possible, and what it might mean to live underwater.

What was used, and how?

Hardware | Software

We modeled the 3D objects in Blender, textured them in Substance Painter, and then baked them in Blender again. From there, the pipeline moved into Unreal Engine for the final shading, including animated effects. We also built the interactions in the engine. Post-processing then added the final touches. The presenter uses a head-mounted display (HTC Vive) to guide the audience through the metaverse. The audience sits in a bubble within the metaverse and can be taken in any direction.

Wormhole - A LIVE view into another universe

Michael Koch | Planetarium, FFmpeg

Maks Hytra | Augmented Reality – SparkAR

Raphael Ntumba | Ideation

Viktor Waal | Augmented Reality, Pitch

Short description of the project

Idea | Content

A wormhole is inserted into a 360° background video. A wormhole is a hypothetical connection between our region of space and another point in space, another time in the past or future, or a completely different universe. We do not know whether wormholes really exist, but they do not contradict the laws of physics.

In our simulation, the view through the other end of the wormhole comes in real time from a 360° camera, so planetarium visitors can interactively see themselves in the wormhole. In the second part, a wormhole is implemented as an Instagram filter for smartphone AR using SparkAR, which users can try out on their own smartphones.

What was used, and how?

Hardware | Software

Windows PC with Ricoh Theta 360° camera,

FFmpeg script,

Smartphone AR via SparkAR as Instagram filter

Planet B

Damian T. Dziwis | composition, media art, immersive media engineer

Rita Below | communication design, 3D design, programming

Alexander Filuk | cross platform, app, web and game development, sensor based interaction

Short description of the project

Idea | Content

Planet B – the Plan B planet of the Planetarium Bochum. An immersive alien planet with audiovisual elements generated by artificial intelligence. Planet B invites visitors to a digital encounter on a web-based virtual platform that is not limited to the Planetarium Bochum and its 360° video and audio performance, but also connects the on-site audience with people from all over the world in a metaverse. Using VR and AR systems, visitors can create the planet together and, through exclusive controls in the planetarium, breathe life into its audiovisual objects.

What was used, and how?

Hardware | Software

Rendering computer, dome projection,

Ambisonics 3D audio playback, Metaverse

Web server, VR/AR/mobile compatible,

Mobilejoypad.com, TH Cologne IVES rendering engine, Blender, generative evolutionary AI networks

SOULPLANET

Julian Bringezu

Sören Stölting

Olena Ronzhes

Jewgeni „Jeff“ Birkhoff

Imad el Khechen

Steffen Junginger

Short description of the project

Idea | Content

Planets have souls. They pulsate with life or drift, seemingly dead, through the endless black. Their surfaces are fluid, or else fissured and riddled with scars.

They have unique personalities, just like us. Our vision: in the future, everyone at the Planetarium Bochum will be able to use our application to discover, visualize and share their unique personality in the form of a planet. Answers to psychological questions shape the planet's visual characteristics.

What was used, and how?

Hardware | Software

Domemaster

Unity

Nuendo

Shape & Color

by form follows functions

Michel Görzen | Electrical Engineering + Microcontrollers

Cora Braun | Planetarium + Immersive Media

Manno Ludzuweit | VR Development + Planetarium

Joris Willrodt | VR Development + Planetarium

Oliver Werner | Immersive Media

Short description of the project

Idea | Content

Shape & Color is an interactive planetarium experience in which the audience has to solve small puzzles together. While the audience is trapped in a futuristic synthwave tube, discs with differently positioned holes fall toward them. Using trackers passed around the audience area, participants control virtual objects on the dome and steer them through the holes. Each object successfully steered through a hole earns points. The higher the score, the higher the group's ranking in the Hall of Fame. The goal of the application is to create a shared reality in which all audience members can participate through small interactions.

What was used, and how?

Hardware | Software

Hardware: 4 Lighthouses 2.0; 4 HTC Vive Trackers 3.0; Valve Index VR headset (passive only, in sleep mode)

Software: Unity 2021.4.3.f; SteamVR; Blender; Affinity Designer.

The trackers can be moved anywhere within the Lighthouses' tracking area. To keep the application as simple as possible to use, moving the trackers is the audience's only input. The tracking data is processed with Unity and SteamVR, and the virtual environment is projected onto the dome as a DomeView.